User testing our own website

At Atomic Smash, we practise what we preach. We ran B2B user testing on our own website to identify opportunities to strengthen our sales proposition and user experience.

We believe that user testing is the single most valuable method for collecting qualitative feedback on your website. When we wanted to test and strengthen the proposition and user journeys on our own website, it was our first port of call.

What is user testing, and why do it?

User testing helps you gather feedback on your website, so you can roll out enhancements that improve the user experience and make it more effective.

Typically you’ll hear directly from a small group of participants, representative of your target audience, to identify issues or friction points relating to:

- usability

- functionality

- design

- messaging

- the overall user experience

You might ask participants to complete tasks, like navigating to a specific page, and you’ll observe and record their interactions with your website.

You can conduct sessions in a variety of ways to suit your needs. For instance, they can be in-person or remote, moderated or unmoderated, and run with support from a testing platform or an agency.

Usually, users are asked to vocalise thoughts and experiences, and rate the difficulty of specific tasks.

Insights gathered through user testing can be interpreted alongside quantitative data such as your website analytics, as well as wider user research and UX principles, to produce a clear, strategic roadmap of website improvements based on data rather than guesswork.

User testing plays a crucial role in optimising your website to better meet user needs, improve conversion rates, and enhance overall user satisfaction.

“The benefits of user testing are plentiful, from uncovering customers’ needs and challenges to designing better solutions, making faster decisions, and encouraging a customer-first approach, to name a few.”

The value of user testing

We ran user testing through our own organisation as if we were working with a client. Before kicking off, we took the time to make a business case for user testing, and to demonstrate how we would ensure it was a good use of our resources.

Our main goals were to:

- Revisit the proposition on our homepage to improve clarity

- Discover how easily prospects navigated through the site

- Use our observations to build out a roadmap of improvements to increase conversions

We tested with users who closely matched our buyer personas, so we could gather relevant feedback on proposition, design, functionality and clarity of messaging.

Designing improvements around what our target audience wants and needs, and how they behave, ensures the work we do is valuable. All of this contributes to the wider commercial goal of increasing conversions through our website.

How user testing feeds into future strategies

This blog post is about user testing, but first, let’s take a few steps back. Before user testing took centre stage, we tasked our Insights team with helping us plan out strategic improvements to enhance our website.

We wanted to feed our backlog with web design and development tasks that would improve and optimise the digital experience for our target audience. This is an ongoing process we call Always Evolving®.

Just like we would with any real client, we talked through business goals and challenges so that our Insights team could recommend (and implement) the best research methods for us.

Most importantly, our data showed that we were receiving high numbers of leads and enquiries from prospects who weren’t a great fit for us. This indicated that our key messaging and services weren’t clear enough for our website visitors.

We also wanted to see how users navigate across our site, discover which sections and web features people like (or don’t), and check how easy it is to get in touch with us.

On top of this, we also wanted to explore and trial some new ideas that we later intend to roll out across our other client websites – our own website is a safe environment to experiment first!


Leaning on Insights

Our Insights team combined qualitative and quantitative research to better understand user behaviour on the Atomic Smash website.

User testing was the most prominent element, but we supplemented it with GA4 analysis: for instance, path exploration across our website, and a review of bounce rate and exits, both of which were too high on the homepage.

This data analysis, along with team review and discussion, gave us hypotheses to validate through user testing.

How we conducted user testing

Our Insights team conducted remote, unmoderated B2B user testing. Users were set a number of prescribed tasks to complete in their own time, with their screen recordings and audio made available to us afterwards.

We created a scenario where the user is a prospect who is seeking a supplier and wants to find out more information about what we do, then submits an enquiry.

The user was guided through this with a series of tasks, and they were prompted to verbalise their thoughts and rate the difficulty of each task as they completed them.

Our Insights specialists undertook a detailed analysis of the recorded user testing sessions, creating a spreadsheet matrix to identify common experiences. They later presented consolidated feedback with a list of recommendations to me (as “the client”).

Objectives

- Review the Atomic Smash website experience and get feedback from our target user audience to identify points of friction along the conversion path

- Find out what users think about our messaging and proposition

- Observe users and uncover usability problems while interacting with the website

- Understand where we can improve design, messaging and UX to better convert qualified prospects

Recruiting participants for user testing

We screened participants based on characteristics that brought them as close to our target audience personas as possible, such as technology platforms, location, age range, and role seniority.

We also split testers across device types, but with a higher proportion of desktop testing compared to mobile testing (reflecting the split of how people use our site).

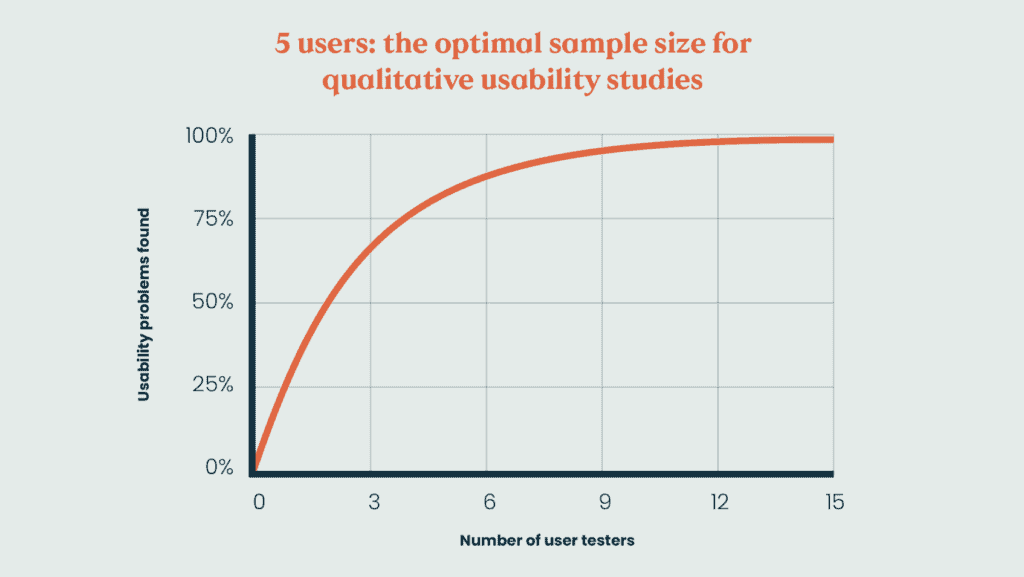

There’s a common misconception that user testing is costly and/or time-consuming. In reality, we don’t test with more than five users per device type, following the Nielsen Norman Group guidance for optimal qualitative results from usability studies.

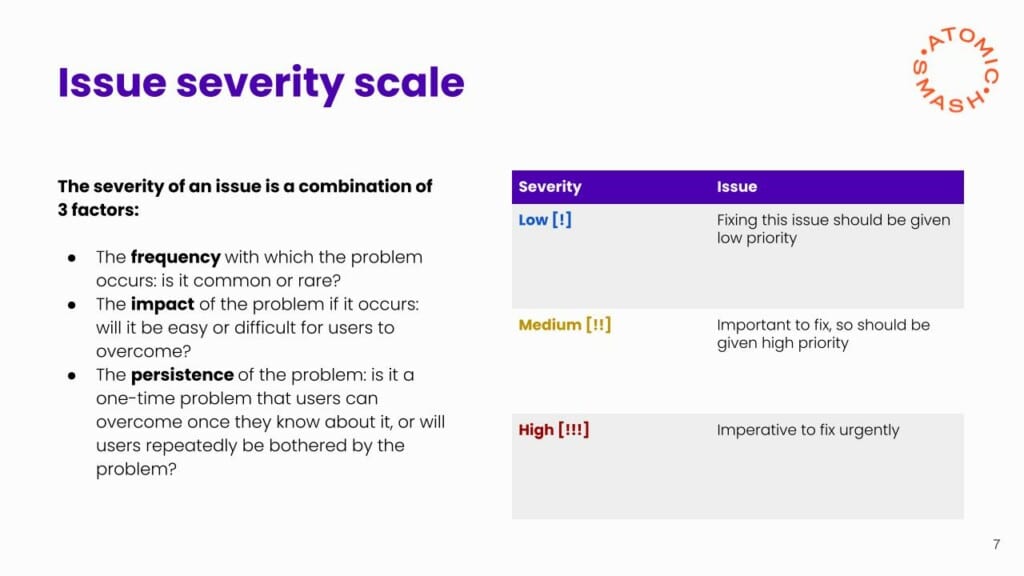
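The reasoning behind the five-user guideline comes from a model published by Jakob Nielsen and Tom Landauer: if each tester has a probability L of uncovering a given problem, then n testers are expected to find a share 1 − (1 − L)^n of the problems. The minimal sketch below assumes Nielsen's reported average of L = 0.31; the rate for your own site and tasks may well differ. Under that assumption, five users surface roughly 84% of problems, and each additional tester adds less.

```python
def proportion_found(n_users: int, discovery_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users testers.

    Uses the Nielsen & Landauer model 1 - (1 - L)^n, where L is the
    probability that one user uncovers a given problem. 0.31 is the
    average Nielsen reported; it is an assumption, not a constant.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    return 1 - (1 - discovery_rate) ** n_users


# Show the diminishing returns as more testers are added.
for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users -> {proportion_found(n):.0%} of problems")
```

Because the curve flattens after about five users, running a second small round after fixing the first batch of issues usually teaches you more than adding testers to a single round.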

Rating the importance of issues

Our Insights team prioritised the issues raised using a severity scale designed by Nielsen Norman Group, based on the following three factors:

- The frequency with which the problem occurs: is it common or rare?

- The impact of the problem if it occurs: will it be easy or difficult for users to overcome?

- The persistence of the problem: is it a one-time problem that users can overcome once they know about it, or will users repeatedly be bothered by the same problem?
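This post doesn't spell out how the three factors were combined into a single rating, so the sketch below is hypothetical. It scores each factor from 1 to 3, sums the scores and buckets the total into low, medium or high, then sorts issues so the most severe come first. The `Issue` class, the 1 to 3 scales, the thresholds and the example issues are all illustrative assumptions, not the exact scale our Insights team used.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    """A usability issue scored on three hypothetical 1-3 scales."""
    description: str
    frequency: int    # 1 = rare, 3 = common
    impact: int       # 1 = easy to overcome, 3 = difficult to overcome
    persistence: int  # 1 = one-time problem, 3 = bothers users repeatedly

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-3 range.
        for name in ("frequency", "impact", "persistence"):
            value = getattr(self, name)
            if not 1 <= value <= 3:
                raise ValueError(f"{name} must be between 1 and 3, got {value}")

    def severity(self) -> str:
        # Hypothetical rule: the summed score ranges from 3 to 9.
        total = self.frequency + self.impact + self.persistence
        if total >= 8:
            return "high"
        if total >= 5:
            return "medium"
        return "low"


def prioritise(issues: list[Issue]) -> list[Issue]:
    """Order issues from most to least severe for roadmap planning."""
    rank = {"high": 0, "medium": 1, "low": 2}
    return sorted(issues, key=lambda issue: rank[issue.severity()])


# Illustrative issues only; these are not findings from our testing.
backlog = [
    Issue("Footer link label is unclear", frequency=1, impact=1, persistence=1),
    Issue("Form error message is vague", frequency=3, impact=3, persistence=2),
    Issue("Intro copy needs a second read", frequency=2, impact=1, persistence=2),
]
for issue in prioritise(backlog):
    print(f"{issue.severity():>6}: {issue.description}")
```

A summed score keeps the rule easy to explain to stakeholders; a team could just as reasonably weight impact more heavily, or let any single factor rated 3 push an issue up a level.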

Most issues were rated medium, and the fewest were rated high. This later helped us prioritise improvements and plan them into a realistic, manageable roadmap.

Analysis of feedback

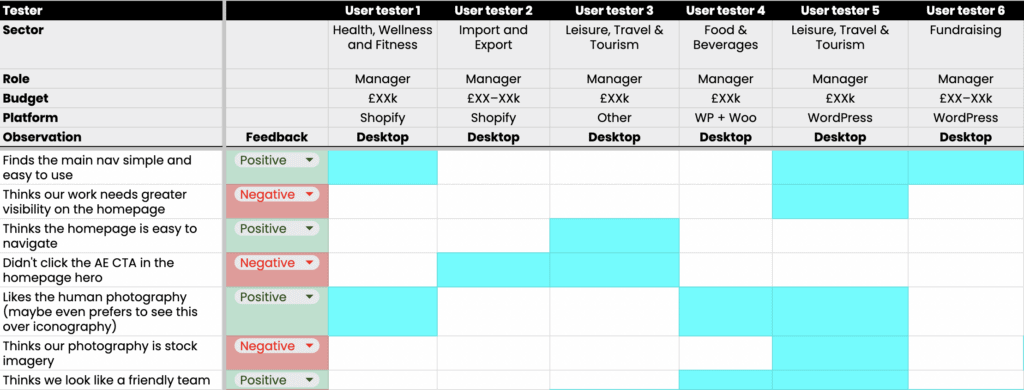

User testing feedback was first analysed in detailed spreadsheets. Here’s a representation, with some user testing data omitted:

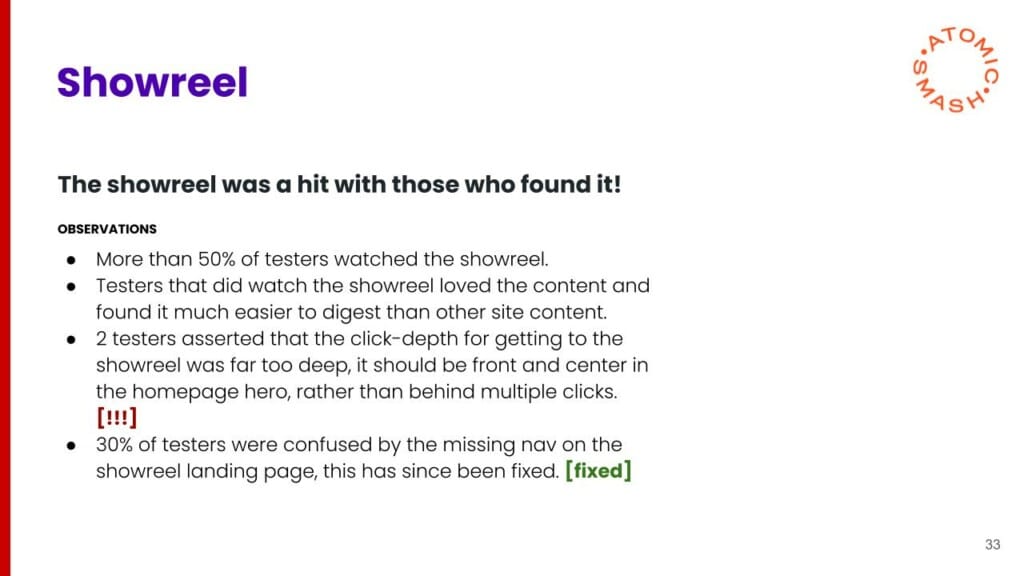

The Insights team then pulled the key findings together into a client-ready slide deck. Here’s one of the slides:

What did we learn?

On the whole, feedback on the Atomic Smash brand was very positive.

Our testers correctly guessed the size of the agency and felt that we looked like a young and friendly team. They felt we demonstrated experience and expertise, and saw us as trustworthy because of our transparency around results and price points.

Positive feedback

Examples of what we learnt

- We were able to identify the messaging that chimed with users, in particular: “You’ll never need a new website again”

- Our showreel was a major hit, so we looked into how we could make it even easier to access

- Participants were clear about how to make an enquiry, understood the platforms that we work on, and saw the value in our retainer services and proposition

Positive feedback shows us what we’re doing well, which is helpful, but finding clear areas for improvement is invaluable. User testing has also shone a light on the main points of friction in the usability of our website.

Areas for improvement

Examples of what we learnt

- A clear trend in the quantitative data helped us identify a gap in the journey to get information about our most prominent service

- Qualitative feedback highlighted that our proposition could be clearer and more concise; we needed to better explain what we do and how we achieve it

- User journeys were compromised by the site navigation structure – some pages could be removed (to reduce confusion or friction), combined (to be clear and concise), or elevated (to provide a more holistic feel of the agency)

Putting it into action

Following user testing, we rolled out some improvements rapidly with design and development tasks pushed through Always Evolving® – the monthly retainer for website enhancements we implement for our own website, just like we do for clients. This included:

- Redesigning the homepage hero to put our showreel front and centre

- Simplifying our proposition and leaning into messaging and CTAs that sparked interest with user testers

- Rethinking how we present services on the homepage and preparing a new section with improved clarity through design and copy changes

We turned around these improvements within one quarter.

Takeaways

Usability testing is the single best way to get qualitative feedback on your site. It’s a key activity you should invest in. And you can work with experts (like the team at Atomic Smash) to have user testing, analysis and a roadmap of improvements rolled out rapidly.

Let’s get testing

Ready to level up your website? Let’s experiment to find the best path forward.